Lecture 9: Improving Training of Neural Networks Part 1 Code


#@title

from ipywidgets import widgets

from IPython.display import YouTubeVideo, display

out1 = widgets.Output()

# Render the lecture video inside the output widget
with out1:

    video = YouTubeVideo(id="9Z5C-bOMafs", width=854, height=480, fs=1, rel=0)

    print("Video available at https://youtube.com/watch?v=" + video.id)

    display(video)

display(out1)

#@title

from IPython import display as IPyDisplay

IPyDisplay.HTML(

"""

<div>

<a href="https://github.com/DL4CV-NPTEL/Deep-Learning-For-Computer-Vision/blob/main/Slides/Week_4/DL4CV_Week04_Part05.pdf" target="_blank">

<img src="https://github.com/DL4CV-NPTEL/Deep-Learning-For-Computer-Vision/blob/main/Data/Slides_Logo.png?raw=1"

alt="button link to the lecture slides" style="width:200px"></a>

</div>""" )

Activation functions

import torch

import numpy as np

import matplotlib.pyplot as plt

x_points = torch.linspace(-10,10,1000)

x_points.shape

torch.Size([1000])

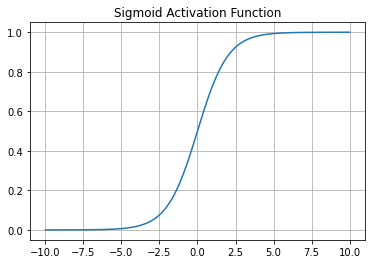

activation = torch.nn.Sigmoid()

plt.plot(x_points,activation(x_points))

plt.title('Sigmoid Activation Function')

plt.grid()
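
As a quick sanity check on the curve plotted for the sigmoid, the sketch below compares `torch.nn.Sigmoid` with the closed form 1 / (1 + exp(-x)). The names `x`, `manual`, `builtin`, and `matches` are illustrative and are not part of the lecture code.

```python
import torch

# Same input grid as the plots: 1000 points in [-10, 10]
x = torch.linspace(-10, 10, 1000)

# Closed form of the logistic sigmoid: 1 / (1 + exp(-x))
manual = 1.0 / (1.0 + torch.exp(-x))

# PyTorch's built-in module
builtin = torch.nn.Sigmoid()(x)

# The two should agree up to floating-point error
matches = torch.allclose(manual, builtin, atol=1e-6)
print(matches)
```

On this grid the output stays strictly between 0 and 1, which is why the plotted curve flattens at both ends.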

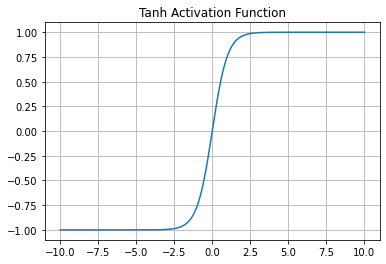

activation = torch.nn.Tanh()

plt.plot(x_points,activation(x_points))

plt.title('Tanh Activation Function')

plt.grid()
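
The tanh curve is a rescaled and shifted sigmoid, tanh(x) = 2 * sigmoid(2x) - 1, which is why the two plots share the same S shape while tanh is centred at zero. A minimal sketch of that identity follows; the variable names are assumptions made for illustration.

```python
import torch

x = torch.linspace(-10, 10, 1000)

# Built-in tanh module
tanh_builtin = torch.nn.Tanh()(x)

# Identity: tanh(x) = 2 * sigmoid(2x) - 1
tanh_from_sigmoid = 2.0 * torch.sigmoid(2.0 * x) - 1.0

# Agreement up to floating-point error
matches = torch.allclose(tanh_builtin, tanh_from_sigmoid, atol=1e-6)
print(matches)
```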

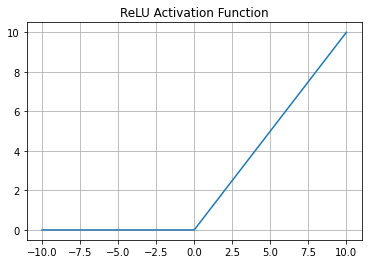

activation = torch.nn.ReLU()

plt.plot(x_points,activation(x_points))

plt.title('ReLU Activation Function')

plt.grid()
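
ReLU is simply max(0, x) applied elementwise. The short sketch below checks this against `torch.clamp`; the names `x`, `builtin`, `manual`, and `matches` are illustrative only.

```python
import torch

x = torch.linspace(-10, 10, 1000)

# Built-in ReLU module
builtin = torch.nn.ReLU()(x)

# max(0, x), written as a clamp from below at zero
manual = torch.clamp(x, min=0)

matches = torch.equal(builtin, manual)
print(matches)
```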

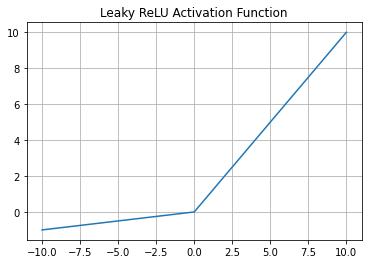

activation = torch.nn.LeakyReLU(negative_slope=0.1)

plt.plot(x_points,activation(x_points))

plt.title('Leaky ReLU Activation Function')

plt.grid()
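
With `negative_slope=0.1`, Leaky ReLU passes positive inputs through unchanged and scales negative inputs by 0.1 instead of zeroing them. A minimal sketch of that rule, using illustrative variable names, might look like this:

```python
import torch

x = torch.linspace(-10, 10, 1000)
negative_slope = 0.1  # same value as in the plot above

builtin = torch.nn.LeakyReLU(negative_slope=negative_slope)(x)

# x for non-negative inputs, negative_slope * x otherwise
manual = torch.where(x >= 0, x, negative_slope * x)

matches = torch.allclose(builtin, manual)
print(matches)
```

At the left edge of the grid (x = -10) the output is therefore about -1 rather than 0.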

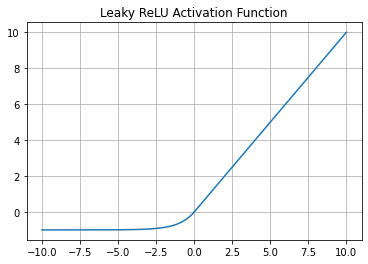

activation = torch.nn.ELU()

plt.plot(x_points,activation(x_points))

plt.title('ELU Activation Function')

plt.grid()
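
ELU with its default `alpha=1.0` returns x for positive inputs and alpha * (exp(x) - 1) otherwise, so the negative side saturates smoothly towards -alpha. The sketch below checks that form against `torch.nn.ELU`; the variable names are assumptions for illustration.

```python
import torch

x = torch.linspace(-10, 10, 1000)
alpha = 1.0  # default value of torch.nn.ELU

builtin = torch.nn.ELU(alpha=alpha)(x)

# x for positive inputs, alpha * (exp(x) - 1) otherwise;
# torch.expm1 computes exp(x) - 1 accurately for small |x|
manual = torch.where(x > 0, x, alpha * torch.expm1(x))

matches = torch.allclose(builtin, manual, atol=1e-6)
print(matches)
```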

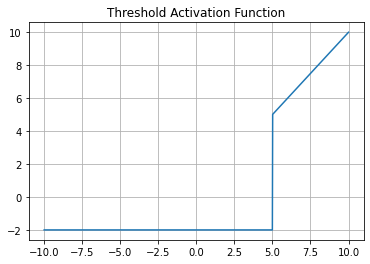

# Keeps x where x > 5 and outputs -2 everywhere else
activation = torch.nn.Threshold(threshold=5, value=-2)

plt.plot(x_points,activation(x_points))

plt.title('Threshold Activation Function')

plt.grid()
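
The two arguments of `torch.nn.Threshold` are easy to mix up: the first is the threshold and the second is the replacement value. Inputs strictly greater than the threshold pass through, and everything else is replaced. A small sketch under the same settings as the plot (threshold 5, value -2), with illustrative variable names:

```python
import torch

x = torch.linspace(-10, 10, 1000)

builtin = torch.nn.Threshold(threshold=5, value=-2)(x)

# Keep x where x > 5, otherwise output the constant -2
manual = torch.where(x > 5, x, torch.full_like(x, -2.0))

matches = torch.equal(builtin, manual)
print(matches)
```

This explains the shape of the plot: a flat line at -2 up to x = 5, followed by a jump to the identity line.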

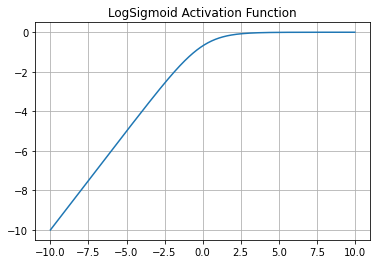

activation = torch.nn.LogSigmoid()

plt.plot(x_points,activation(x_points))

plt.title('LogSigmoid Activation Function')

plt.grid()
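
LogSigmoid is log(sigmoid(x)), which can equivalently be written as -softplus(-x). The sketch below checks both forms on the plotted range. The variable names are illustrative, and the comparison covers only the interval [-10, 10] used here.

```python
import torch
import torch.nn.functional as F

x = torch.linspace(-10, 10, 1000)

builtin = torch.nn.LogSigmoid()(x)

# Direct definition: log(sigmoid(x))
naive = torch.log(torch.sigmoid(x))

# Equivalent form: -softplus(-x) = -log(1 + exp(-x))
via_softplus = -F.softplus(-x)

matches_naive = torch.allclose(builtin, naive, atol=1e-6)
matches_softplus = torch.allclose(builtin, via_softplus, atol=1e-6)
print(matches_naive, matches_softplus)
```

Because sigmoid(x) is always below 1, the output is always negative and approaches 0 from below as x grows.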

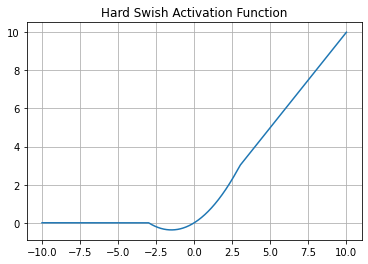

activation = torch.nn.Hardswish()

plt.plot(x_points,activation(x_points))

plt.title('Hard Swish Activation Function')

plt.grid()
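
Hard Swish is a piecewise approximation of Swish, defined as x * ReLU6(x + 3) / 6. It is 0 for x <= -3, equal to x for x >= 3, and quadratic in between. A minimal sketch of that formula, with variable names chosen for illustration:

```python
import torch
import torch.nn.functional as F

x = torch.linspace(-10, 10, 1000)

builtin = torch.nn.Hardswish()(x)

# x * ReLU6(x + 3) / 6, where ReLU6 clips its input to [0, 6]
manual = x * F.relu6(x + 3.0) / 6.0

matches = torch.allclose(builtin, manual, atol=1e-6)
print(matches)
```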